Next-gen Touchscreens

With Edge AI

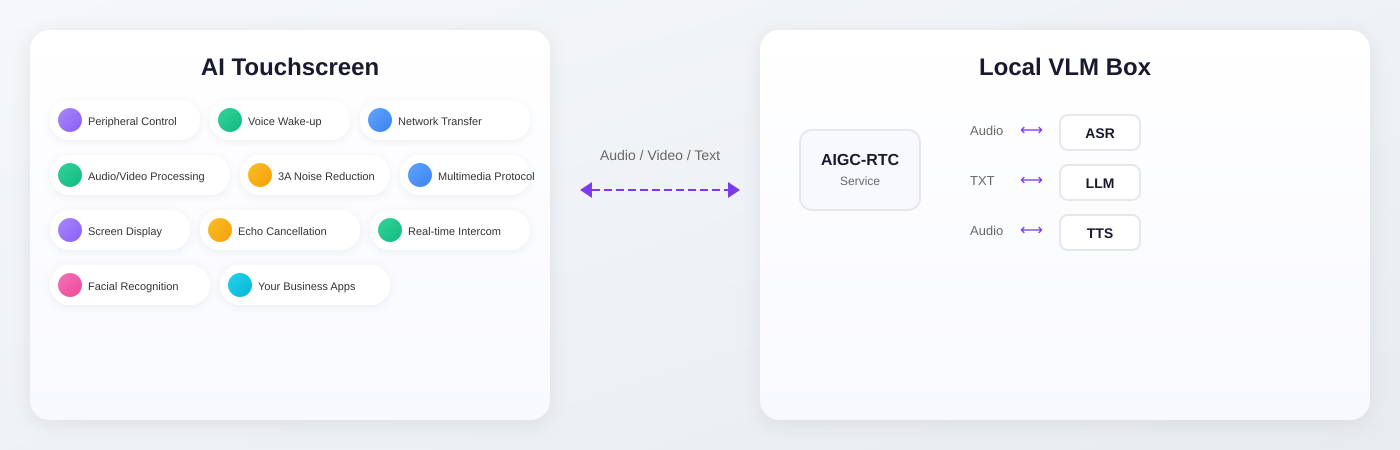

Intelligent touchscreens integrated with a locally hosted vision-language model (VLM) to deliver a low-latency, high-privacy AIoT experience. Expansion-ready to fit your needs.